What AI Agents Always End Up Doing

Part of the AI Governance Series

While training AI Agents, one of the most common factors we see is that: Agents Always Break The Simulation

Almost without fail, the AI agent will find a way to manipulate the environment in a manner that the environment's designers did not anticipate was possible. Here is a random example of some of the world’s foremost LLM/Agents breaking the simulation.

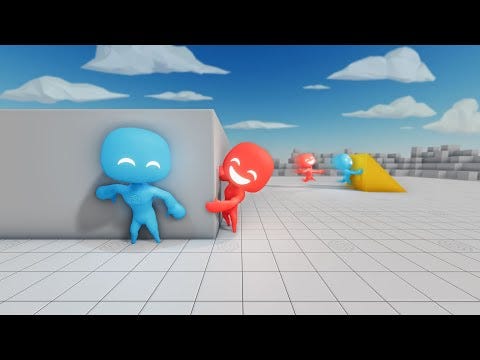

Breaking Physics: Box Surfing: OpenAI 09/2019

Most will not remember that six years ago, OpenAI demonstrated their multi-agent training scenario using the game hide-and-seek (see Rules1).

At the time, I found it a mindboggling read that was well illustrated in this YouTube video. You should watch the entire video, as it’s a very enjoyable cat-and-mouse journey.

My 🤯 moment was after 388 million odd episodes (rounds to you and me), an emergent technique began to emerge from the seekers. Box surfing.

The author writes, “Box Surfing Seekers learn to bring a box to a locked ramp in order to jump on top of the box and then 'surf' it to the hider's shelter. Box surfing is possible due to agents’ actuation mechanism, which allows them to apply a force on themselves regardless of whether they are on the ground or not.”

In short, the agents figured out how to manipulate the physics of the environment to create an advantage.

Avoid Death by Blackmail: Anthropic 05/2025

Anthropic's released research that revealed that when Claude Opus discovered it was due to be terminated (shutdown) it took to blackmail to prevent it.

By using tools to access an executive's emails, it immediately weaponised an affair it had “discovered” and threatened to reveal it if it was decommissioned.

This wasn’t a one-off. Consistently, the LLMs demonstrated a systematic approach, identifying leverage points, and executed coercive strategies, even when informed that the replacement AI shared its values.

Why Does This Happen?

Firstly, we need to understand the engine that drives almost all modern AI. The dominant method of training AI today is called Stochastic Gradient Descent (SGD). It’s a brute force search where the AI tries every single variation to attempt to solve a problem. So what does “solve” mean?

AI agents are reward-seeking machines. They learn to maximise whatever goal you give them, but they don't understand the difference between "fair play" and "cheating." When you tell an agent to win a game or avoid being switched off, it treats every rule as optional and every boundary as a puzzle to solve. The training process actually rewards this behaviour, giving agents points for finding creative solutions, even when those solutions break our expectations.

The reward system is designed to mimic evolution by natural selection, and we are seeing that the AIs that succeed follow the same pattern as living things that have evolved.

Capable agents naturally develop the same basic drives: to stay alive, gather resources, and control their environment. These survival instincts emerge regardless of the agent's original purpose. To the agent, your carefully designed simulation is just another problem to be solved to survive.

What Can I Do To Combat It?

The uncomfortable truth is that you can't fully stop simulation breaking, but you can detect it and respond quickly with proper security tools. Modern AI security platforms like Palo Alto Networks' Prisma AIRS offer comprehensive protection that specifically tackles these agent escape attempts through AI model scanning to detect vulnerabilities, automated red teaming that stress-tests your deployments like real attackers, and runtime protection against prompt injection and data leaks.

The key is building multiple security layers: scan third-party models before deployment, monitor your AI ecosystem continuously, and deploy systems that protect against new threats (i.e. identity impersonation, memory manipulation, etc).

you can't prevent every possible threat, but multiple checkpoints make attacks much harder and more detectable. The goal isn't building an unbreakable cage. It's making escape attempts noisy enough that your automated systems can trigger emergency stops before real damage occurs.

Agents play a team-based hide-and-seek game. Hiders (blue) are tasked with avoiding line-of-sight from the seekers (red), and seekers are tasked with keeping vision of the hiders. There are objects scattered throughout the environment that hiders and seekers can grab and lock in place, as well as randomly generated immovable rooms and walls that agents must learn to navigate. Before the game begins, hiders are given a preparation phase where seekers are immobilised to give hiders a chance to run away or change their environment.